VMware’s latest release of the vSphere virtualization suite, version 6.5, changes how they handle vNUMA presentation to a virtual machine, and there’s a high likelihood that it will break your virtualized SQL Server vNUMA configuration and lead to unexpected changes in behavior. It might even slow down your SQL Servers.

Here’s what you need to do to fix it.

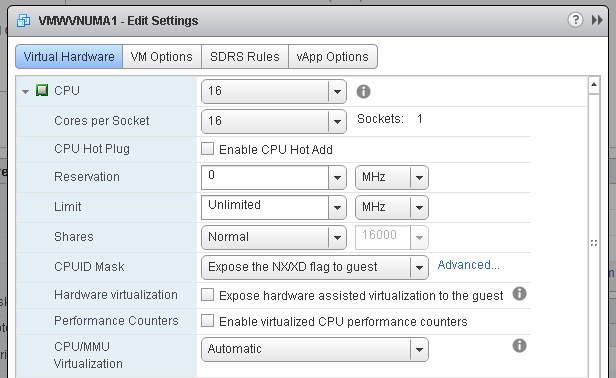

By default, for the last few releases you have been able to set the vNUMA, or virtual CPU sockets and cores configuration, appropriately on the VM through the basic CPU configuration screen.

With this setting on versions of vSphere between 5.0 and 6.0, regardless of the physical server CPU NUMA configuration, your virtual machine would contain one virtual CPU socket and 16 CPU cores. You had the serious potential to misalign your VM with the physical CPU NUMA boundaries and cause performance problems.

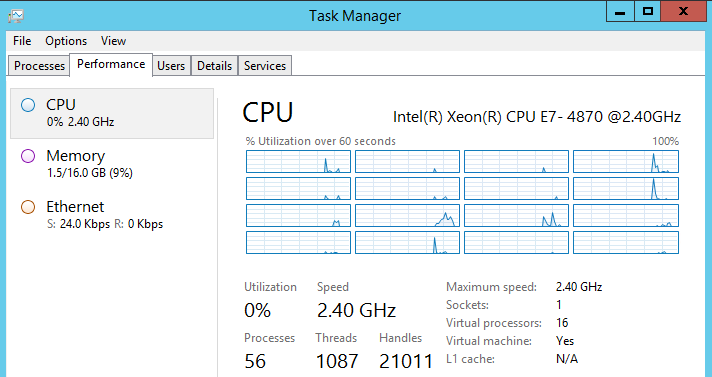

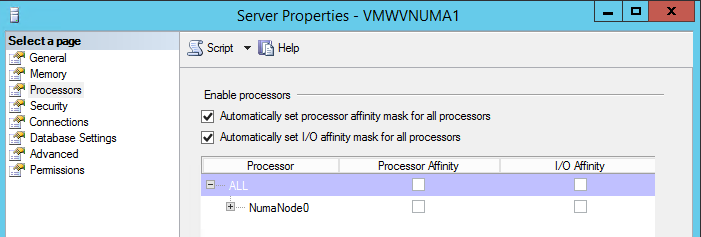

By default on vSphere 6.5, these settings are ignored. Look at SQL Server when you power on this VM.

The hypervisor picked up that the host hardware contained 10-core CPUs, and automatically adjusted the vNUMA setting to a 2×8 configuration in an attempt to improve performance.

I appreciate their attempt to improve performance, but this presents a challenge for performance-oriented DBAs in many ways. It now changes expected behavior from the basic configuration without prompting or notifying the administrators in any way that this is happening. It also means that if I have a host cluster with a mixed server CPU topology, I could now have NUMA misalignments if a VM vMotions to another physical server that contains a different CPU configuration, which is sure to cause a performance problem.

Worse yet is that if I were to restart this VM on this new host, the hypervisor could automatically change the vNUMA configuration at boot time based on the new host hardware.

I now have a change in vNUMA inside SQL Server. My MaxDOP settings could now be wrong. I now have a change in expected query behavior.

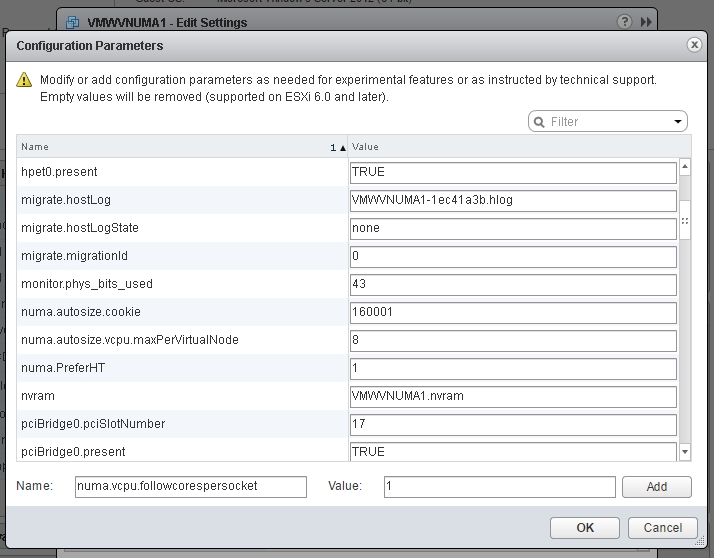

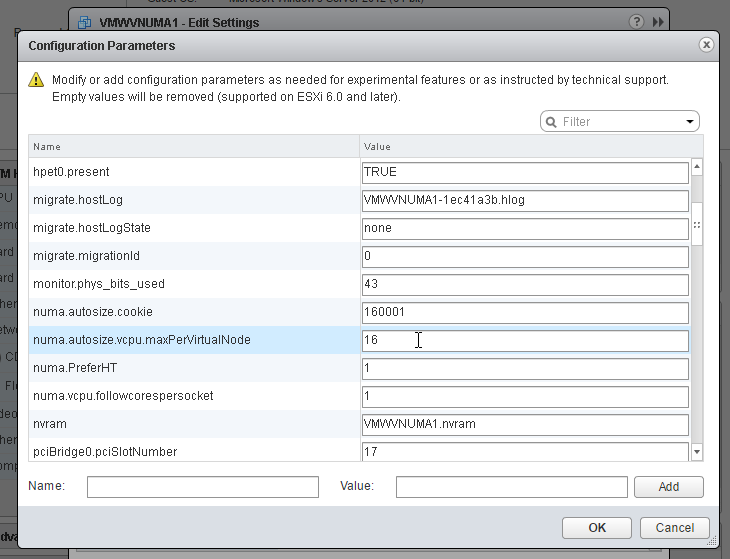

To keep maximum control over the expected behavior of this SQL Server VM, you can override the new default in the following ways. Be careful: forcing the wrong settings can just as easily slow the VM down. The VM must be powered off before certain advanced parameters are entered into the VM configuration. Edit the VM settings, go to VM Options, expand Advanced, and click ‘Edit Configuration’ next to Configuration Parameters.

Now you need to think a little bit here. How many CPUs do you want total, and how many NUMA nodes do you want presented to the virtual machine?

Add the following configuration parameter: numa.vcpu.followcorespersocket = 1.

Change the configuration value ‘cpuid.coresPerSocket’ to the number of vCPUs you want present on one CPU socket.

Set the number of max CPUs per NUMA node to this same value with the parameter numa.autosize.vcpu.maxPerVirtualNode.

Voila. You’re back to expected vNUMA behavior.
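As a minimal sketch of the three settings above, the following Python helper derives the values from the two questions asked earlier: how many vCPUs in total, and how many NUMA nodes to present. The parameter names come from this post; the function name `vnuma_advanced_settings` and the requirement that the vCPUs split evenly across nodes are assumptions made for illustration, not anything VMware prescribes.

```python
def vnuma_advanced_settings(total_vcpus: int, numa_nodes: int) -> dict:
    """Return the three advanced parameters for an even vCPU split.

    Hypothetical helper: it only does the arithmetic, it does not
    talk to vCenter or modify any VM.
    """
    if total_vcpus <= 0 or numa_nodes <= 0:
        raise ValueError("vCPU and NUMA node counts must be positive")
    if total_vcpus % numa_nodes != 0:
        # Assumption: we only handle evenly sized NUMA nodes.
        raise ValueError("vCPUs must divide evenly across the NUMA nodes")

    per_node = total_vcpus // numa_nodes
    return {
        # Make vNUMA follow the cores-per-socket value again.
        "numa.vcpu.followcorespersocket": "1",
        # vCPUs presented on one virtual CPU socket.
        "cpuid.coresPerSocket": str(per_node),
        # Maximum vCPUs per virtual NUMA node, set to the same value.
        "numa.autosize.vcpu.maxPerVirtualNode": str(per_node),
    }


if __name__ == "__main__":
    # Example: a 16 vCPU VM presented as two NUMA nodes of 8 vCPUs each.
    for key, value in vnuma_advanced_settings(16, 2).items():
        print(f"{key} = {value}")
```

The output is only a list of key/value pairs to type into the Configuration Parameters dialog; test the resulting topology inside SQL Server before relying on it.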

More details on these parameters are found here, and more advanced parameters are found here. Validate your performance before and after you make any of these changes so you don’t slow something down, and work with your infrastructure admins so you know exactly what physical CPU NUMA topology you have underneath your virtualized SQL Servers and what changes you should make with these settings.

Hi David!

I’d like to make some clarifying statements here:

First, I’d suggest the use of the word “break” is click-bait. I’d suggest something more like: “Heads Up – vNUMA behaviour changes in vSphere 6.5”

Next, yes – vNUMA in vSphere 6.5 was enhanced to ensure the VM is presented with the best vNUMA topology the first time the VM is powered on.

In earlier versions of vSphere, we already did this automatically when corespersocket was set to 1, so this behaviour has not changed. Additionally, vNUMA is only evaluated once and set to be static at the first power on of the VM, or if the vCPU count of that VM changes.

We do not randomly re-evaluate the vNUMA topology and it will stay static ‘unless’ you tell it otherwise (by moving away from defaults and using advanced settings like autosize). So functions like power off/on, restart and vMotion DO NOT change the vNUMA topology after it’s been set. This is true for any version of vSphere including 6.5.

What we did change in vSphere 6.5 is that now you will always be presented with the optimal vNUMA topology regardless of what you set for corespersocket. This is a good change and a performant one, based on many customer cases in which they selected sub-optimal VM sizes.

Let’s look at an example:

On a 2 socket, 10 core ESXi host you want a 12vCPU VM and can do the following:

12 vSockets x 1 vCores (aka corespersocket =1)

– vSphere 6.0 presents 12 sockets to the guest OS and 2 vNUMA nodes

– vSphere 6.5 presents 12 sockets to the guest OS and 2 vNUMA nodes

– Mark’s take: optimal vNUMA topology for either version, poor socket count for guest OS licensing

1 vSocket x 12 vCores

– vSphere 6.0 presents 1 socket and 1 vNUMA node

– vSphere 6.5 presents 1 socket and 2 vNUMA nodes

– Mark’s take: sub-optimal vNUMA topology in 6.0, optimal vNUMA topology in 6.5, okay socket count for guest OS licensing

2 vSockets x 6 vCores

– vSphere 6.0 presents 2 sockets and 2 vNUMA nodes

– vSphere 6.5 presents 2 sockets and 2 vNUMA nodes

– Mark’s take: This is the best & right option for both versions

6 vSockets x 2 vCores

– vSphere 6.0 presents 6 sockets and 6 vNUMA nodes

– vSphere 6.5 presents 6 sockets and 2 vNUMA nodes

– Mark’s take: sub-optimal vNUMA topology in 6.0, optimal vNUMA topology in 6.5, poor socket count for guest OS licensing

So what’s right? (aka best config for guest OS licensing AND performance)

2 vSockets x 6 vCores
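The four cases above can be reproduced with a small Python model. This is a simplified sketch of the behaviour as described in this comment only, not VMware's actual algorithm: the function name `presented_vnuma_nodes` is hypothetical, and the model ignores advanced settings, memory size, and any minimum vCPU threshold for vNUMA.

```python
import math


def presented_vnuma_nodes(vsockets: int, cores_per_socket: int,
                          pcores_per_node: int, version: str) -> int:
    """Model how many vNUMA nodes the guest sees, per the examples above."""
    vcpus = vsockets * cores_per_socket
    # Automatic sizing: the fewest nodes that fit within physical node size.
    auto_nodes = math.ceil(vcpus / pcores_per_node)
    if version == "6.5" or cores_per_socket == 1:
        # 6.5 always auto-sizes; 6.0 auto-sizes only when corespersocket = 1.
        return auto_nodes
    # 6.0 with corespersocket > 1: vNUMA follows the virtual sockets.
    return vsockets


if __name__ == "__main__":
    # 12 vCPU VM on a host with 10 cores per physical NUMA node.
    for sockets, cores in [(12, 1), (1, 12), (2, 6), (6, 2)]:
        v60 = presented_vnuma_nodes(sockets, cores, 10, "6.0")
        v65 = presented_vnuma_nodes(sockets, cores, 10, "6.5")
        print(f"{sockets} x {cores}: 6.0 -> {v60} nodes, 6.5 -> {v65} nodes")
```

The same model gives two nodes for the 16 vCPU VM on 10-core hardware from the original post, which matches the 2×8 layout seen there.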

So those examples outline that picking the right-size for the VM in terms of guest OS licensing and performance still needs to be manually determined. This will be addressed in future releases of vSphere.

But what we have done in vSphere 6.5 is to automate the right vNUMA topology selection under all conditions, not just when corespersocket=1, which will assist customers when they make a sub-optimal choice. It still does not excuse a bad presentation of vSockets and vCores.

When you upgrade though from 6.0 to 6.5 we DO NOT change the vNUMA topology, again, unless you have used advanced settings. We don’t want to change a configured workload that you have already made. This will only apply to new workloads or those that change vCPU counts.

I hope this helps add clarity to the enhancements.

Happy to hear from folks with feedback & comments (good/bad or otherwise): @vmMarkA

Mark! Great to hear from you! I agree with a lot of what you state in your comments here. However, your comments are from a VM administrator’s perspective. As a DBA, especially one with highly tuned high performance workloads, this change could negatively impact some of these workloads. For example, a new feature in SQL Server 2016 called automatic soft NUMA detects the core count per socket, and when it is greater than eight, it reserves the right to subdivide a NUMA node into smaller soft-NUMA nodes. If what the front-end configuration shows is different from what is actually presented to the VM, my means to present smaller vNUMA configurations to tune for the fastest performance might not work. This post was designed to make people aware of the changes, show them how to adjust these settings, and let them evaluate and test for themselves which of these options presents the fastest possible configuration for their workloads.

And yes, the title was meant to draw attention 🙂 But… in the last week I’ve already had six production workloads that were negatively impacted by these changes and that I had to go back and fix.

I’d like to learn more about your customers that were impacted and the details of the scenarios to ensure we do everything we can to prevent that. I suspect advanced settings at play. When you have a moment – reach out – I believe I’m in your rolodex.

Cheers, vmMarkA

David, your headline is, shall we say, very sexy. Here I am ogling it, and commenting. Sadly, the article could use some technical accuracies, in my very humble opinion.

The new vNUMA behavior does NOT “break” your SQL, unless by “break” you meant to say that Microsoft SQL administrators’ previous knowledge (and, yes, assumption) of the vNUMA feature of vSphere needs to be “broken” – in which case, I’d say that we are in complete agreement. The new behavior addresses a specific problem – administrative manual errors in setting the appropriate virtual socket/cores-per-socket combinations, which result in sub-optimal allocations to VMs and, therefore, induce performance degradation that is then used to buttress the assumption that virtualization is not suitable for “critical applications”.

The vNUMA change in vSphere 6.5 attempts to redress these administrative “fat finger” errors by ignoring the manual GUI-based socket/cores-per-socket combo when the hypervisor determines that the manual setting is radically inconsistent with the physical NUMA architecture. It attempts to “unbreak” what many administrators have been unintentionally “breaking” when they get their vCPU presentation wrong.

While we recognize that there is still room for improvement, your readers will appreciate knowing that they don’t need the VM advanced configuration options you mentioned in this article to achieve the desired outcome you presented. They can keep things simple by simply sizing their VMs based on the physical NUMA architecture of their hardware AND in line with the technical and licensing restrictions of their Microsoft SQL Server editions.
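To make "size to your hardware" concrete, here is a rough Python sketch of one possible rule of thumb: pick the fewest virtual sockets such that each socket's cores fit inside one physical NUMA node and the vCPUs divide evenly. The rule is inferred from the 2 vSockets x 6 vCores example earlier in this thread, and the function name `suggest_layout` is hypothetical. It does not account for SQL Server or Windows licensing limits, or for memory per NUMA node, all of which still need a manual check.

```python
import math


def suggest_layout(vcpus: int, pcores_per_node: int) -> tuple:
    """Return (vsockets, cores_per_socket) for a hypothetical right-sizing rule."""
    if vcpus <= 0 or pcores_per_node <= 0:
        raise ValueError("vCPU and physical core counts must be positive")

    # Fewest sockets that keep each socket within one physical NUMA node.
    min_sockets = math.ceil(vcpus / pcores_per_node)
    for sockets in range(min_sockets, vcpus + 1):
        if vcpus % sockets == 0:
            return sockets, vcpus // sockets
    # Unreachable for positive inputs: sockets == vcpus always divides evenly.
    raise ValueError("no even layout found")


if __name__ == "__main__":
    # Host with two 10-core sockets, as in the examples in this thread.
    for vcpus in (8, 12, 16):
        sockets, cores = suggest_layout(vcpus, 10)
        print(f"{vcpus} vCPUs -> {sockets} vSockets x {cores} vCores")
```

For awkward vCPU counts the rule can produce many small sockets, which is usually a hint to pick a different VM size rather than a layout to deploy.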

As you are aware, our previous guidance that recommends leaving the “corespersocket” count at the default value of 1 works like a charm, except for the Express and Standard editions of Microsoft SQL Server. This is one of the reasons why we are asking customers to take a different approach to vCPU allocation, not just on vSphere 6.5, but on previous vSphere versions as well. We are counting on experts like you to help us convey the “Know Your Hardware. Size To Your Hardware” message to the community. The vNUMA change in 6.5 is a “change”, and changes can be confusing. What it does not do is “break” anything in SQL.

Deji, thank you for the solid response. I respect your counterpoint here, and I’ll be posting a follow up soon. This change on 6.5 was not evident in the documentation and already changed expected behavior in some newly deployed SQL Servers on a freshly upgraded vSphere platform. The change in behavior was perceived by the client as breaking their expectations on behavior, hence the name. I’ll be following up with a point-counter-point on this, and will be talking about the scenarios that I’ve seen the change in behavior.

Hi All,

This question is really for vmMarkA.

I am confused about the example you gave above.

To recap, the scenario is: 2 socket, 10 core ESXi host. There is a 12vCPU VM

“1 vSocket x 12 vCores

– vSphere 6.0 presents 1 socket and 1 vNUMA node

– vSphere 6.5 presents 1 socket and 2 vNUMA node

– Mark’s take: sub-optimal vNUMA topology in 6.0, optimal vNUMA topology in 6.5, okay socket count for guest OS licensing”

In the vSphere 6.0 example, you show only 1 vNUMA node. If the physical socket on the host only has 10 cores, how can only 1 socket be presented to a 12 vCPU VM? Additionally, it seems like you’d have to cross over to the second, physical NUMA node on the host when you have more vCPUs than cores on the socket. Were you noting 12 physical cores on the host and not accounting for Hyper-Threading? In that case, I can see how this would work, as you’d have 20 logical cores on the socket and I can see how a 12 vCPU VM could stay on one physical socket and in one physical NUMA node.

Also, your previous comment contradicts your previous article, http://blogs.vmware.com/vsphere/2013/10/does-corespersocket-affect-performance.html, where you said, “Operating systems should be fine and rediscover changed NUMA topology after reboot.” Above you said, “So functions like: power off/on, restart and vMotion DO NOT change the vNUMA topology after its been set.” It would be good to update the previous article. 🙂 I tried to post to it but comments are closed. Also, thank you for writing the first article and also chiming in with some detailed information. Much appreciated!

Your preferred configuration here of 2 vSockets and 6 vCores also contradicts your previous advice from the above article. Previously, you noted, “keep your configuration to 1 core per socket UNLESS you need to change because your Windows Server license restricts you to a limited number of sockets. This guidance applies to vSphere 5.0+ which was when we introduced our vNUMA feature.” In David’s example, I believe he’s running on a Windows Server OS so he should be able to run a lot of sockets. Therefore, we can rule out the Windows Server license restriction caveat. Therefore, that would mean your previous advice would be to configure this 12 vCPU VM with 12 virtual sockets. Could you help me understand how your opinion has evolved?

Thanks again!

Richard

I would also like to know the answers to rhoffman14’s question above. I need to get a design done for a client regarding this ASAP. Thanks!

Richard,

I will let Mark comment on his previous statements, but what Mark says is true: you can present a virtual NUMA topology that spans a physical topology. It’s something you do not want to do, but it’s possible.

By default, the NUMA client configuration counts only cores, but with an additional advanced setting you can have the hypervisor count HTs instead of cores to reduce the footprint of the NUMA client. There is a difference between creating a NUMA node and the actual scheduling of threads on the physical environment. It’s the CPU scheduler that decides whether the vCPU is able to consume a logical processor (HT) or a full core; this depends on its relative entitlement, its progress, and the overall consumption level of the physical system.
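The difference between counting cores and counting HTs can be illustrated with a little arithmetic in Python. This is only a sketch of the sizing idea described above: the function name `numa_nodes_spanned` and its `count_hyperthreads` flag are hypothetical stand-ins for the advanced setting, and two threads per core is an assumption. It says nothing about where the CPU scheduler actually places each vCPU.

```python
import math


def numa_nodes_spanned(vcpus: int, pcores_per_node: int,
                       count_hyperthreads: bool = False,
                       threads_per_core: int = 2) -> int:
    """Estimate how many physical NUMA nodes a NUMA client must span."""
    # Capacity of one physical node: cores only by default, or logical
    # processors (HTs) when the hypothetical flag is enabled.
    capacity = pcores_per_node
    if count_hyperthreads:
        capacity *= threads_per_core
    return math.ceil(vcpus / capacity)


if __name__ == "__main__":
    # 12 vCPU VM on a host with 10 cores (20 HTs) per physical NUMA node.
    print("counting cores:", numa_nodes_spanned(12, 10))
    print("counting HTs:  ", numa_nodes_spanned(12, 10, count_hyperthreads=True))
```

Counting cores, the 12 vCPU VM spans two physical nodes; counting HTs, it fits in one, which is the smaller footprint mentioned above.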

When you use the Cores per Socket setting, the NUMA scheduler does not update the “cookie” that describes the virtual NUMA topology. As a result, when the VM is vMotioned to a host with a different physical CPU configuration, the virtual NUMA topology is not updated to align with it.

If you want to read more about the behavior of the ESXi scheduler and specifically about this update, I would recommend reading my article: http://frankdenneman.nl/2016/12/12/decoupling-cores-per-socket-virtual-numa-topology-vsphere-6-5/

Frank, thanks for chiming in. Your vNUMA series are legendary, and the post you reference is great.

I wanted to briefly say thank you for the post, Frank. I will dig into these details after the holidays. Mark advised that he would try to respond then too.

Cheers,

Richard

Here’s a clarifying article:

https://blogs.vmware.com/performance/2017/03/virtual-machine-vcpu-and-vnuma-rightsizing-rules-of-thumb.html

Richard

(somehow lost track of this promised response – apologies for my tardiness)

quote:

“In the vSphere 6.0 example, you show only 1 vNUMA node. If the physical socket on the host only has 10 cores, how can only 1 socket be presented to a 12 vCPU VM? Additionally, it seems like you’d have to cross over to the second, physical NUMA node on the host when you have more vCPUs than cores on the socket. Were you noting 12 physical cores on the host and not accounting for Hyper-Threading? In that case, I can see how this would work, as you’d have 20 logical cores on the socket and I can see how a 12 vCPU VM could stay on one physical socket and in one physical NUMA node.”

Remember that NUMA presentation can be different than sockets x cores. While we tend to mutually tie them together they are separate. Another (old) example is AMD Magny Cours which was actually two NUMA nodes per physical socket.

So yes, the VM would see 1 vSocket, 12 vCores and a single vNUMA node. Underneath, our ESXi schedulers would try to cope with this sub-optimal scenario by mapping it across 2 pSockets and 2 pNUMA nodes. Hyper-threading is not accounted for here, as our default policy is to use the actual pCores first and cross pSockets ‘before’ using Hyper-threads.

quote:

“Your preferred configuration here of 2 vSockets and 6 vCores also contradicts your previous advice from the above article. Previously, you noted, “keep your configuration to 1 core per socket UNLESS you need to change because your Windows Server license restricts you to a limited number of sockets. This guidance applies to vSphere 5.0+ which was when we introduced our vNUMA feature.”

Yes – that’s right. The old article (almost 4 years ago) focused on getting vNUMA right at the expense of a sub-optimal socket x core presentation. At that time, the socket x core presentation was less valuable as compared to vNUMA, but since then, OSes and applications have evolved to need the right presentation for maximum performance. Hence my new article to supersede it (above).