A wonderful gentleman who attended my SQL PASS Summit session this year recently emailed me a great question about the optimal vCPU and vNUMA configuration for SQL Server virtual machines. I thought about the question and realized that while the session covered this topic only lightly, I can go much deeper here on my blog.

While the examples and screenshots in this post are all from my VMware vSphere lab environment, the same concepts apply to the other major hypervisors as well.

First, what is NUMA?

NUMA, or non-uniform memory access, is a computer hardware architecture that places a bank of memory in close proximity to each physical CPU socket. A CPU reaches its own local memory faster than memory attached to another socket, so keeping a workload and its data on the same node improves efficiency as CPU counts and memory capacity grow. The NUMA layout is exposed to the operating system so that applications can be written to schedule work and allocate memory locally. SQL Server is NUMA aware and uses this information to align its schedulers and memory with the NUMA nodes it sees. More information on NUMA can be found over at Wikipedia.

The trick with NUMA and virtual machines is determining how to build a virtual machine that takes advantage of NUMA when performance truly counts. Some virtual machines are mostly idle and not resource intensive at all, and these gain little from this kind of tuning. Other virtual machines are absolute resource hogs that consume a great deal of resources to satisfy their workload requirements. These are the workloads that benefit most from a NUMA-aware configuration.

With VMware vSphere, the ability to extend these NUMA details into a virtual machine was first brought to us in vSphere 5.0. Prior to this point, you could just select the number of vCPUs that a VM was assigned, and the hypervisor was left to balance the CPU and memory requests.

Host Physical Machine

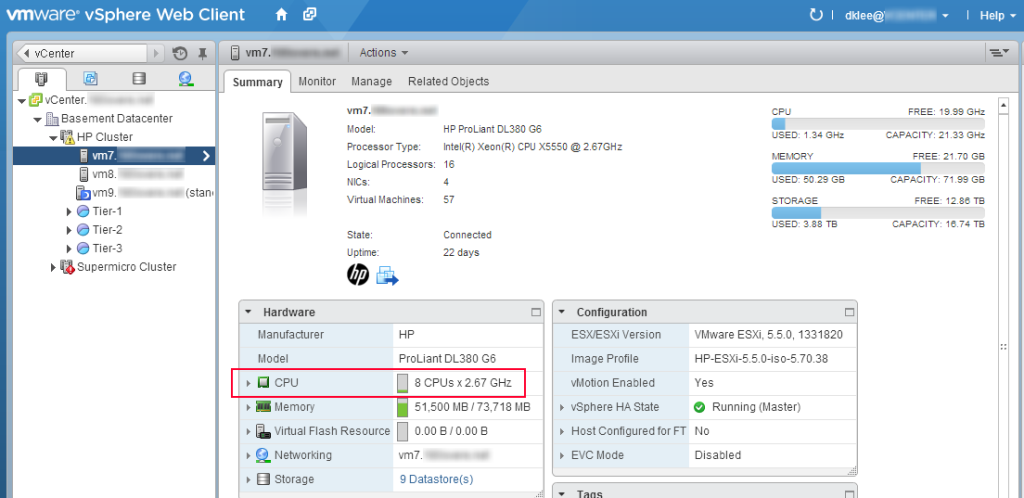

Every modern physical server in a virtualization farm that has more than one CPU socket has its own NUMA configuration. In my home lab, the vCenter management interface shows me the CPU configuration of each physical server.

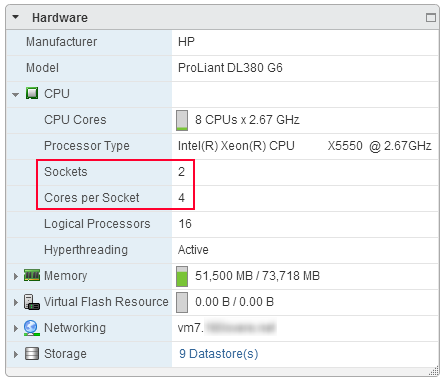

You can see that one of my home lab servers, an HP ProLiant DL380 G6, has eight physical CPU cores from its Intel Xeon X5550 processors. If you drill into the setting a little further, you can see that it has two CPU sockets, each with a quad-core chip, with Hyper-Threading enabled.

So each NUMA node consists of one socket with four physical CPU cores plus its local bank of memory. A quick search will show you that the motherboard in this server has a bank of memory on either side of the CPUs. The server contains 72GB of memory, so with the memory populated evenly, each NUMA node holds half of it, or 36GB.
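The arithmetic behind those node parameters is simple enough to sketch. The following Python is a minimal illustration, assuming one NUMA node per socket and evenly populated memory; the `Host`, `NumaNode`, and `numa_node_of` names are hypothetical and not part of any VMware API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Host:
    sockets: int
    cores_per_socket: int
    memory_gb: int
    hyperthreading: bool = False


@dataclass(frozen=True)
class NumaNode:
    physical_cores: int
    logical_cpus: int
    memory_gb: float


def numa_node_of(host: Host) -> NumaNode:
    """Size of one NUMA node, assuming one node per socket and evenly populated memory."""
    threads_per_core = 2 if host.hyperthreading else 1
    return NumaNode(
        physical_cores=host.cores_per_socket,
        logical_cpus=host.cores_per_socket * threads_per_core,
        memory_gb=host.memory_gb / host.sockets,
    )


if __name__ == "__main__":
    # The lab host described above: 2 x quad-core Xeon X5550, Hyper-Threading on, 72GB RAM
    lab_host = Host(sockets=2, cores_per_socket=4, memory_gb=72, hyperthreading=True)
    print(numa_node_of(lab_host))
```

For the lab host this yields four physical cores (eight logical CPUs with Hyper-Threading) and 36GB of memory per node.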

Excellent. We now have our NUMA parameters that we can design around.

Virtual Machine Sizing

If the workload is small enough to fit within one of these NUMA nodes, keep the virtual machine small so that it fits! On this host, that means four vCPUs or fewer and no more than 36GB of memory. The hypervisor will keep the virtual machine's CPU and memory activity confined to one physical NUMA node, and performance will benefit.

If the virtual machine will not fit inside one NUMA node, balance it evenly across the nodes.
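Those two rules can be expressed as a small sizing helper that mirrors the physical layout by using one virtual socket per NUMA node the VM spans. This is a sketch under simplifying assumptions: it only considers vCPU counts (memory should also stay within a node's capacity), it assumes every physical node has the same core count, and the `recommend_topology` and `VcpuTopology` names are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class VcpuTopology:
    sockets: int
    cores_per_socket: int

    @property
    def vcpus(self) -> int:
        return self.sockets * self.cores_per_socket


def recommend_topology(vcpus: int, node_cores: int) -> VcpuTopology:
    """Hypothetical helper that mirrors the physical NUMA layout in the VM.

    Assumes every physical NUMA node has `node_cores` cores and ignores memory.
    """
    if vcpus < 1 or node_cores < 1:
        raise ValueError("vcpus and node_cores must be positive")
    # Rule 1: if the VM fits inside one node, keep it in a single virtual socket.
    if vcpus <= node_cores:
        return VcpuTopology(sockets=1, cores_per_socket=vcpus)
    # Rule 2: otherwise balance it evenly across the fewest nodes that can hold it.
    nodes = math.ceil(vcpus / node_cores)
    if vcpus % nodes != 0:
        raise ValueError(f"{vcpus} vCPUs cannot be split evenly across {nodes} NUMA nodes")
    return VcpuTopology(sockets=nodes, cores_per_socket=vcpus // nodes)


if __name__ == "__main__":
    print(recommend_topology(6, node_cores=4))
```

With the lab host's four-core nodes, a six-vCPU VM comes out as two sockets of three cores each. Odd counts that cannot be split evenly raise an error rather than producing an unbalanced layout.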

For example, my SQL PASS Summit demonstration virtual machines were configured for six vCPUs because I had “right-sized” them according to the SQL Server workload I was running on them.

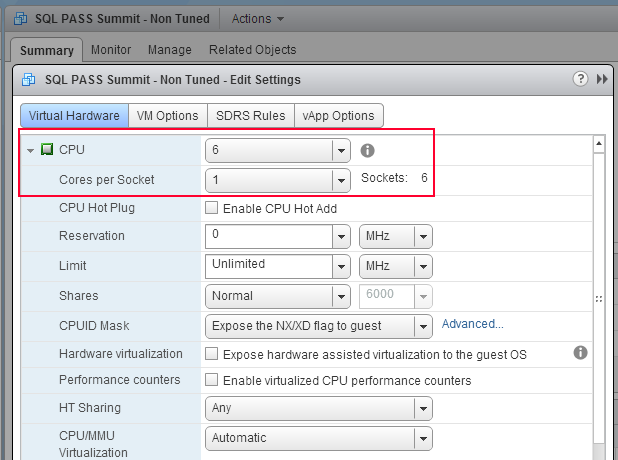

For the non-tuned virtual machine, the default CPU configuration at VM creation time is to create six virtual CPU sockets, each with one virtual CPU core. You can see this by editing the VM configuration.

This configuration leaves the CPU and memory requests free to jump around the NUMA nodes, which can degrade performance because accessing memory on a remote node is slower than accessing local memory.

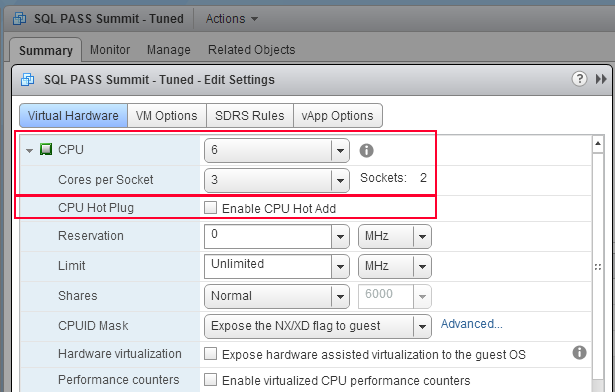

On the flip side, the tuned virtual machine is configured with two virtual CPU sockets, each with three virtual CPU cores. Each virtual socket maps onto one physical NUMA node, using three of its four cores and leaving one core per node free for other processes.
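A rough way to compare the two layouts is to check whether each virtual socket can sit on its own physical NUMA node. The sketch below is a hypothetical helper, assuming a host with two four-core nodes like the DL380 G6; it does not model how the vSphere scheduler actually places vCPUs.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LayoutReport:
    guest_sockets: int
    aligned: bool
    spare_cores_per_node: int


def check_layout(sockets: int, cores_per_socket: int, host_nodes: int, node_cores: int) -> LayoutReport:
    """Hypothetical check: can each virtual socket sit on its own physical NUMA node?"""
    aligned = sockets <= host_nodes and cores_per_socket <= node_cores
    # Only meaningful when aligned: cores left over in each node that hosts a virtual socket.
    spare = node_cores - cores_per_socket if aligned else 0
    return LayoutReport(sockets, aligned, spare)


if __name__ == "__main__":
    print(check_layout(6, 1, host_nodes=2, node_cores=4))  # default layout
    print(check_layout(2, 3, host_nodes=2, node_cores=4))  # tuned layout
```

The default six-by-one layout presents six guest sockets that cannot line up with two physical nodes, while the two-by-three layout aligns with them and leaves one spare core in each node.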

I also deliberately left CPU hot add disabled. If you enable it, vSphere will not extend NUMA into the virtual machine under any circumstances, even if all the other settings are configured properly.

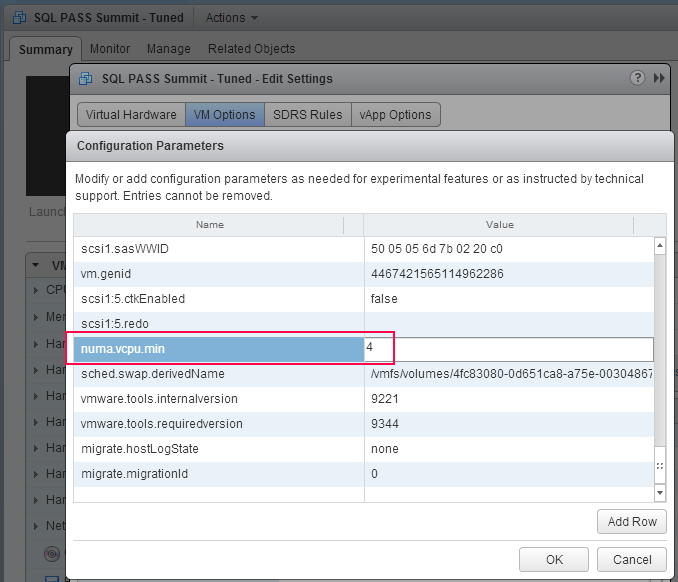

Finally, by default VMware vSphere only extends NUMA into a virtual machine when it has more than eight vCPUs. If you add the advanced VM configuration parameter numa.vcpu.min and set its value to the number of vCPUs in the VM (six in this case), the NUMA topology becomes visible within the virtual machine's operating system.
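For reference, the settings discussed above correspond to entries in the VM's .vmx configuration file. The sketch below renders them for the six-vCPU example. It assumes the .vmx keys `numvcpus`, `cpuid.coresPerSocket`, `vcpu.hotadd`, and `numa.vcpu.min`, which you should confirm against the vSphere documentation for your version; the helper names are hypothetical. In practice you would normally set these through the vSphere client rather than editing the file by hand.

```python
from __future__ import annotations


def vnuma_vmx_settings(vcpus: int, cores_per_socket: int) -> dict[str, str]:
    """Hypothetical helper: .vmx entries for a VM that should expose vNUMA."""
    if vcpus < 1 or cores_per_socket < 1 or vcpus % cores_per_socket != 0:
        raise ValueError("vcpus must be a positive multiple of cores_per_socket")
    return {
        "numvcpus": str(vcpus),
        "cpuid.coresPerSocket": str(cores_per_socket),
        # CPU hot add prevents vNUMA from being exposed, so keep it disabled.
        "vcpu.hotadd": "FALSE",
        # Lower the vNUMA threshold (default 9) to this VM's vCPU count.
        "numa.vcpu.min": str(vcpus),
    }


def render_vmx(settings: dict[str, str]) -> str:
    """Format settings in the key = "value" style used by .vmx files."""
    return "\n".join(f'{key} = "{value}"' for key, value in settings.items())


if __name__ == "__main__":
    # Tuned SQL Server VM from this post: 6 vCPUs as 2 sockets x 3 cores.
    print(render_vmx(vnuma_vmx_settings(6, 3)))
```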

More details on this setting can be found in the whitepaper Performance Best Practices for vSphere 5.5 on page 44.

Now you know how to configure vNUMA settings within VMware vSphere. Other hypervisors have their own methods for doing the same thing. Understand your environment, and you can tune your mission-critical virtual machines for performance more efficiently!
